Detecting Screen Capture in macOS and iOS


Introduction

In this article, we try two approaches to detecting whether a Mac screen is being captured. The first is to investigate existing implementations and the low-level code beneath them. The second is to observe events that macOS already emits, such as the logs shown in Console.

UIScreen and its screen capturing methods

Since iOS 11, UIScreen has exposed its capture state, and that is precisely what we need. We checked the Mac Catalyst counterpart on macOS Big Sur, and it works well.

Get capturing state:

UIScreen.main.isCaptured

Get capturing updates:

UIScreen.capturedDidChangeNotification
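A minimal sketch of how the two members can be combined, assuming a UIKit or Catalyst target. ScreenCaptureObserver is a hypothetical helper name introduced here for illustration; it is not part of UIKit.

```swift
#if canImport(UIKit)
import UIKit

/// Hypothetical helper: forwards the capture state to a closure.
final class ScreenCaptureObserver {
    private var token: NSObjectProtocol?

    init(onChange: @escaping (Bool) -> Void) {
        // Report the current state once, then every later change.
        onChange(UIScreen.main.isCaptured)
        token = NotificationCenter.default.addObserver(
            forName: UIScreen.capturedDidChangeNotification,
            object: nil,
            queue: .main
        ) { notification in
            // The notification's object is the UIScreen whose state changed.
            let screen = (notification.object as? UIScreen) ?? UIScreen.main
            onChange(screen.isCaptured)
        }
    }

    deinit {
        if let token = token {
            NotificationCenter.default.removeObserver(token)
        }
    }
}
#endif
```

The observer has to be kept alive (for example, stored in a property of the app delegate) for as long as updates are needed.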

Reverse engineering UIScreen methods

Catalyst is good, but what if we have a plain Cocoa AppKit app? We need a more cross-platform approach. Our idea: if the UIScreen methods work on both iOS and macOS, they are probably built on top of lower-level functions that are native to both platforms. Let's find out what those are.

The first thing that comes to mind is to look at UIScreen methods in the Hopper disassembler, and we'll do exactly that.

To start, we'll find a framework to disassemble.

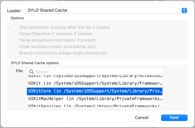

As of macOS Big Sur, all system libraries are now located in a single big cache.

Thus, we go to the /System/Library/dyld folder and drop dyld_shared_cache_x86_64 onto Hopper.

Thankfully, Hopper knows how to work with shared caches and lets us pick a needed slice.

The UIKitCore.framework is the one we want now.

After Hopper finishes its background analysis, we can search procedures for isCaptured.

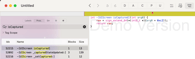

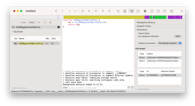

Hopper successfully finds -[UIScreen isCaptured], but when we click on it, the implementation turns out not to be much help (note that in the screenshot Hopper's Display Mode is set to pseudo-code mode).

But we also see during this search that there are other related UIScreen methods. Browsing code in Hopper manually and using different search terms is one of the most effective ways to find something interesting.

As we now have more info about UIScreen internals, we can apply a different approach to diversify our investigation, for example, using breakpoints.

Let's set a symbolic breakpoint on -[UIScreen _setCaptured:] and see what happens when we start capturing.

We will use Slack for testing, but other apps with capturing functionality should work fine.

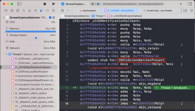

When recording starts and Xcode stops on the breakpoint, we can navigate all methods and stack traces participating in the call.

Mostly there is assembly code, but some symbols or strings may be interesting for us to focus on.

For example, the CGSIsScreenWatcherPresent by its name looks exactly like what we need.

The symbol CGSIsScreenWatcherPresent is part of CGSInternal, a set of private functions that enthusiasts exposed to the public.

It contains a lot of interesting functions that are not available otherwise.

These headers originated in CoreGraphicsServices and then migrated to SkyLight framework.

Getting notified when capturing starts/ends

CGSIsScreenWatcherPresent works just fine in a plain Cocoa AppKit app.
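A hedged sketch of one way to call it from Swift, resolving the symbol at runtime with dlsym instead of linking against private headers. The C signature is an assumption taken from the community CGSInternal headers, and the sketch assumes the process has already loaded the framework that exports the symbol (as an app linking AppKit normally has). isScreenWatcherPresent is a hypothetical wrapper name.

```swift
import Foundation

// Assumed C signature, from the community CGSInternal headers:
//   bool CGSIsScreenWatcherPresent(void);
typealias IsScreenWatcherPresentFn = @convention(c) () -> Bool

/// Hypothetical wrapper. Returns nil when the private symbol cannot be resolved.
func isScreenWatcherPresent() -> Bool? {
    // dlopen(nil, ...) gives a handle that searches images already loaded in the process.
    guard let handle = dlopen(nil, RTLD_LAZY) else { return nil }
    defer { dlclose(handle) }
    guard let symbol = dlsym(handle, "CGSIsScreenWatcherPresent") else { return nil }
    let function = unsafeBitCast(symbol, to: IsScreenWatcherPresentFn.self)
    return function()
}
```

Returning an optional keeps the caller honest: private symbols can disappear in any macOS release, so nil should be treated as "unknown" rather than "not captured".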

But we'd also like to know when capturing starts/ends so we don't have to poll.

UIScreen has capturedDidChangeNotification, so there should probably be its low-level counterpart.

By investigating further with Hopper and breakpoints, we find more relevant keywords, like CGSPostLocalNotification and CGSNotifications. They lead us to the ForceQuitUnresponsiveApps repo, which demonstrates how to use CGSInternal to detect when apps become unresponsive.

Its author uses CGSRegisterNotifyProc to subscribe to kCGSNotificationAppUnresponsive, which looks suspiciously similar to what we seek.

But how do we find the notification code to subscribe to?

Thankfully, there is a result when we search CGSRegisterNotifyProc in UIKitCore.framework using Hopper.

Hopper also has call graph functionality that shows where a function is called from.

There are only 2 callers, so it should be easy.

Both are in -[UIScreen initWithDisplayConfiguration:forceMainScreen:], which makes sense; usually we subscribe to notifications somewhere around init.

CGSRegisterNotifyProc(sub_7ff90ee3fafc, 0x5de, r14)

CGSRegisterNotifyProc(sub_7ff90ee3fafc, 0x5df, r14, @selector(_handleForcedUserInterfaceLayoutDirectionChanged:), *qword_value_140708518685824, 0x0)

Both calls take sub_7ff90ee3fafc function as the first argument.

From the name, it's unclear what this function does, but when we double-click on this function to open it, we can see that this is the function that updates the "captured" state:

void sub_7ff90ee3fafc(int arg0, int arg1, int arg2, int arg3) {
    rbx = [arg3 retain];
    [rbx _capturedStateUpdated:CGSIsScreenWatcherPresent() & 0xff];
    [rbx release];
    return;
}

The codes used in the calls are 0x5de and 0x5df.

By converting to decimal, we get 1502 and 1503, respectively.
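The conversion is easy to double-check in Swift:

```swift
// Parse the two hexadecimal codes seen in the disassembly.
let codes = ["5de", "5df"].compactMap { Int($0, radix: 16) }
print(codes)  // [1502, 1503]
```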

Looking at CGSEventType in CGSEvent.h, we find these lines:

kCGSessionRemoteConnect = 1502

kCGSessionRemoteDisconnect = 1503

It works as needed, and we can observe both capture start and end by using these codes.
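A rough sketch of such a subscription from Swift, again resolving the private symbol with dlsym. The C declarations in the comments are assumptions based on the community CGSInternal headers; CaptureEvent, captureCallback, and registerForCaptureEvents are hypothetical names. The sketch also assumes it runs inside an AppKit app with a running main run loop.

```swift
import Foundation

// Assumed C declarations, from the community CGSInternal headers:
//   typedef void (*CGSNotifyProcPtr)(CGSEventType type, void *data,
//                                    unsigned int dataLength, void *userData);
//   CGError CGSRegisterNotifyProc(CGSNotifyProcPtr proc, CGSEventType type,
//                                 void *userData);
typealias CGSNotifyProc = @convention(c) (
    UInt32, UnsafeMutableRawPointer?, UInt32, UnsafeMutableRawPointer?
) -> Void
typealias RegisterNotifyProcFn = @convention(c) (
    CGSNotifyProc, UInt32, UnsafeMutableRawPointer?
) -> Int32

enum CaptureEvent {
    static let started: UInt32 = 1502  // 0x5de, kCGSessionRemoteConnect
    static let ended: UInt32 = 1503    // 0x5df, kCGSessionRemoteDisconnect
}

// A C callback cannot capture context, so it only inspects the event code.
let captureCallback: CGSNotifyProc = { type, _, _, _ in
    print(type == CaptureEvent.started ? "Capture started" : "Capture ended")
}

/// Returns true when both registrations report success (CGError 0).
func registerForCaptureEvents() -> Bool {
    guard let handle = dlopen(nil, RTLD_LAZY) else { return false }
    defer { dlclose(handle) }
    guard let symbol = dlsym(handle, "CGSRegisterNotifyProc") else { return false }
    let register = unsafeBitCast(symbol, to: RegisterNotifyProcFn.self)
    return register(captureCallback, CaptureEvent.started, nil) == 0
        && register(captureCallback, CaptureEvent.ended, nil) == 0
}
```

Calling registerForCaptureEvents() once at launch should be enough; the callback can then query CGSIsScreenWatcherPresent to confirm the current state.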

However, there is a problem: when the CGSNotifyProcPtr procedure is called with these codes, no data is passed, so there is no way to detect which app exactly is doing the capture.

The names of codes also imply that CGS functionality (like the Window Server) sees these events as "remote session" events and is probably not interested in the nature of the capture in this case.

Inspecting Logs

There is an open-source app OverSight that monitors the microphone and the camera of a Mac, alerting the user when the internal microphone is activated or whenever a process accesses the camera.

It detects these events by looking at log messages (for instance, in Console).

This is possible with the private LoggingSupport.framework.

Its OSLogEventLiveStream class even has filtering helpers like setFilterPredicate: that restrict the stream to specific log messages.

For example, to know when the camera starts and stops on an Intel machine, the log monitor looks for events from the process VDCAssistant, and messages like: "Client Connect for PID", "GetDevicesState for client", "StartStream for client" and such.

We can extend our approach and see what happens in the Console when the screen is captured.

By watching the Console while we select QuickTime Player - File - New Screen Recording - Record Entire Screen, we can see processes and messages that reveal when recording starts, for example from screencapture and screencaptureui.

Here are some key events that indicate capturing started; we can use them to find the places of interest in the logs:

Subsystem: com.apple.TCC

Category: access

Process: screencapture

Message: SEND: 0/7 asynchronous to com.apple.tccd.system: request: msgID=79710.2, function=TCCAccessRequest, service=kTCCServicePostEvent,

Subsystem: com.apple.screencapture

Category: default

Process: screencaptureui

Message: Toolbar video screenshot pressed

Process: screencapture

Message: Capturing video

Subsystem: com.apple.screencapture

Category: recording

Process: screencapture

Message: Setup recording

filename: 27B2D7E7-9F3C-4E96-A995-C8D3ACAB74FE.mov

display: 69733382,

rect: nil
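As a sketch of how these markers could be watched for, the example below swaps the private LoggingSupport.framework for the public log command-line tool, which accepts the same kind of predicate. The function names are hypothetical, and we have not verified at which log level these messages are emitted; if nothing shows up, adding "--level", "info" or "--level", "debug" to the arguments may be needed.

```swift
import Foundation

/// Messages from the list above that the screencapture process logs when recording starts.
let captureStartMarkers = ["Capturing video", "Setup recording"]

/// Pure helper: does this log line contain one of the markers?
func indicatesCaptureStart(_ line: String) -> Bool {
    captureStartMarkers.contains { line.contains($0) }
}

/// Starts `log stream` filtered to the screencapture subsystem and calls
/// `onStart` for every matching line. The caller must keep the Process alive.
func watchScreencaptureLogs(onStart: @escaping (String) -> Void) throws -> Process {
    let process = Process()
    process.executableURL = URL(fileURLWithPath: "/usr/bin/log")
    process.arguments = [
        "stream", "--predicate", "subsystem == \"com.apple.screencapture\""
    ]
    let pipe = Pipe()
    process.standardOutput = pipe
    pipe.fileHandleForReading.readabilityHandler = { handle in
        guard let chunk = String(data: handle.availableData, encoding: .utf8) else { return }
        // A chunk may hold several lines; a line split across two chunks
        // can be missed in this simplified sketch.
        chunk.split(separator: "\n")
            .map(String.init)
            .filter(indicatesCaptureStart)
            .forEach(onStart)
    }
    try process.run()
    return process
}
```

A caller would hold on to the returned Process and call terminate() on it when monitoring is no longer needed.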

Not all capturers post logs

We tried Slack, and it didn't post any logs regarding screen capturing or anything from the list above. Slack is an Electron app, so it probably uses desktopCapturer under the hood.

It looks like it's a different area to investigate; we decided not to invest that much effort at the moment.

Conclusion

For iOS and Catalyst apps, screen capturing detection is possible via the UIScreen API.

https://developer.apple.com/documentation/uikit/uiscreen/2921651-iscaptured

It works well on macOS and handles both screen capturing and screen sharing.

For a plain Cocoa AppKit app, CGSInternal helps with its CGSIsScreenWatcherPresent and CGSRegisterNotifyProc functions.

Core Graphics also has the Quartz Display Services API, which includes "Capturing and Releasing Displays" functions such as the deprecated CGDisplayIsCaptured.

But "capturing" seems to mean something different in that context: a process that has taken over the whole screen, which describes full-screen processes like games.

Potential integrations

- Hide any sensitive information during screen capturing/sharing. (Security/Privacy)

- Suggest closing other traffic-consuming apps to keep video calls stable. (Utility)

- Close all unnecessary windows during video calls. (Cleaning)

- Notify users about unwanted screen captures. (Security/Privacy)