AI Opportunities in Operating Systems

Understanding AIOS

Today, AI is everywhere, and one more AI assistant that not only answers questions but also takes actions on your behalf will surprise no one. Moreover, assistants are expected to offer task completions and suggestions even before you ask. On the one hand, assistants are just programs; on the other, they are agents: besides performing tasks, they can behave adaptively and react to environmental changes, providing the best result under the given conditions.

And what about the operating system (OS)? As the platform for running software, it is also software itself and, consequently, could be an AI-boosted agent.

AI is commonly described as comprising the following six disciplines:

- Natural language processing (NLP): communicating in human language

- Knowledge representation: storing what it knows

- Automated reasoning: developing algorithms and systems capable of making logical inferences and drawing conclusions automatically

- Machine learning (ML): acquiring knowledge from data and adapting to new circumstances

- Computer vision and speech recognition: perceiving the world

- Robotics: performing physical tasks in the real world

Thus, an AI operating system should combine the above capabilities while still performing the core OS tasks, such as managing hardware and software resources and providing services for them.

Searching for information about AI operating systems reveals two interpretations of the term: an OS with at least one AI agent, or a well-optimized platform for running AI algorithms efficiently. Our topic of interest is an OS whose main features are managed by AI. In other words, we're interested in AI running the OS rather than the OS running AI.

This investigation was inspired by the relatively recent paper 'AI bases OS', in which the idea of an AI operating system (AIOS) is raised with the following main features:

- Monitoring and optimizing its own performance

- Smart process and memory management

- Predicting results

- Using the right resources

- Making sure that resources are available by predicting demand before a process asks for them

- Suggesting alternative solutions to a problem

- Searching internally and externally for information to help itself

- Deriving a solution to a problem by itself

- Self-healing and becoming immune to viruses after an attack

- Communicating with other OSes

Current State of AIOS

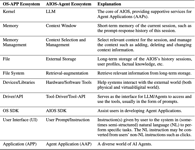

One of the latest results on the topic proposes the idea of a large language model (LLM) as an OS. Relying on the most recent advancements in AI, especially the capabilities of LLMs, the authors introduce the concept of the LLMOS, an operating system with an LLM 'soul', and argue for its relevance by drawing analogies with the traditional OS.

In particular, sharing the LLM backbone between multiple users and processes requires scheduling, much like CPU management; handling the limitations of the model's context window corresponds to memory management; and accessing external data storage corresponds to storage management. Agents could play the role of applications, libraries and SDKs; natural language prompts correspond to system calls; and some pre-defined prompts provide an analogy for the standard CLI commands in a traditional OS. A GUI would convert different user interactions into prompts that the LLM can understand.

An LLM can also learn from feedback on its actions (reinforcement learning) or from examples (supervised learning) and thus continuously improve, similar to how the kernel is updated based on bug reports and performance issues.
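To make the scheduling analogy more tangible, here is a minimal sketch of sharing one LLM between several agents with a round-robin policy, similar to CPU time slices. Everything in it is an assumption made for illustration: the `AgentRequest` structure, the `fake_llm` stand-in, and the choice of round-robin itself are not taken from the LLMOS proposal.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class AgentRequest:
    """Pending prompts of one agent (hypothetical structure)."""
    agent: str
    prompts: deque = field(default_factory=deque)


def fake_llm(prompt: str) -> str:
    """Stand-in for the shared LLM backbone; a real system would call a model."""
    return f"response to {prompt!r}"


def round_robin(requests, llm=fake_llm):
    """Serve one prompt per agent per turn, like CPU time slices."""
    ready = deque(r for r in requests if r.prompts)
    served = []
    while ready:
        req = ready.popleft()
        prompt = req.prompts.popleft()
        served.append((req.agent, llm(prompt)))
        if req.prompts:  # unfinished agents rejoin the back of the queue
            ready.append(req)
    return served


requests = [
    AgentRequest("mail", deque(["summarize inbox", "draft reply"])),
    AgentRequest("files", deque(["find report.pdf"])),
]
order = [agent for agent, _ in round_robin(requests)]
print(order)  # ['mail', 'files', 'mail']
```

The "files" agent is served before the "mail" agent's second prompt, so no single agent monopolizes the model. A real scheduler would likely also need priorities and preemption.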

A somewhat more concrete proposal on the topic is F4OS. The authors argue for a single foundation model for OS (F4OS) capable of handling all OS responsibilities, instead of the existing partial, task-specific AI solutions: the strong interdependence of OS components means that tuning them separately is suboptimal. In particular, the approach relies on the fact that every modern OS collects logs from its components, hardware, applications, etc., which automatically provides training data and potentially captures the relationships between OS processes. As an example, the authors consider the scheduling and cache replacement tasks. Along with the OS traces for each of them, traces of their relationship are gathered naturally, since, for example, process completion times depend on resources and, in particular, on the cache.
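As a rough illustration of how traces from two components could be combined into joint training data, the sketch below joins a scheduler trace with a cache trace by process ID. The record fields (`pid`, `completion_ms`, `hit`) and the sample values are hypothetical; real OS logs have different formats and would need parsing first.

```python
from collections import defaultdict

# Hypothetical trace records, invented for this example.
scheduler_trace = [
    {"pid": 1, "completion_ms": 120},
    {"pid": 2, "completion_ms": 45},
]
cache_trace = [
    {"pid": 1, "hit": False},
    {"pid": 1, "hit": False},
    {"pid": 1, "hit": True},
    {"pid": 2, "hit": True},
    {"pid": 2, "hit": True},
]


def join_traces(sched, cache):
    """Pair each process's completion time with its cache hit rate."""
    counts = defaultdict(lambda: [0, 0])  # pid -> [hits, accesses]
    for rec in cache:
        counts[rec["pid"]][0] += int(rec["hit"])
        counts[rec["pid"]][1] += 1
    rows = []
    for rec in sched:
        hits, accesses = counts[rec["pid"]]
        rows.append({
            "pid": rec["pid"],
            "cache_hit_rate": hits / accesses if accesses else None,
            "completion_ms": rec["completion_ms"],
        })
    return rows


rows = join_traces(scheduler_trace, cache_trace)
for row in rows:
    print(row)
```

Each resulting row links a scheduling outcome to cache behaviour for the same process, which is the kind of cross-component relationship a single model could learn from.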

The foundation model approach is also actively promoted and investigated in the cloud systems field. Such systems are comparable to an OS in complexity and share many similar tasks; consequently, ideas can potentially be reused and shared between the two.

Although the ideas of a universal (foundation) model controlling and managing OS responsibilities sound great, they are mostly theoretical visions rather than concrete implementations. The applications of AI to single OS tasks, such as network management, memory management, CPU scheduling, etc., seem 'more alive' and have, if not production-ready implementations, at least working prototypes.

Many such results are related to tuning OS parameters. For example, storage components in an OS rely on heuristic algorithms and allow some of their parameters to be tuned via system calls. There is a prototype of an ML framework for improving the performance of the memory management system. In particular, it solves the problem of adaptively setting the heuristic values for readahead. Readahead is a technique that reduces the latency of accessing data from storage by bringing the required information into the system's memory before an application or process actually requests it. Its effectiveness is highly dependent on the nature of the workload and its access patterns, which makes it and similar tasks good candidates for AI-based solutions.
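The dependence of readahead on access patterns can be illustrated with a small sketch. It is not the ML framework mentioned above: a hand-written rule stands in for the learned model, and the window sizes and threshold are illustrative assumptions rather than tuned values.

```python
def sequential_ratio(offsets):
    """Fraction of accesses that hit the page right after the previous one."""
    if len(offsets) < 2:
        return 0.0
    sequential = sum(
        1 for prev, cur in zip(offsets, offsets[1:]) if cur == prev + 1
    )
    return sequential / (len(offsets) - 1)


def choose_readahead_pages(offsets, small=8, large=256, threshold=0.5):
    """Pick a readahead window (in pages) from recent page offsets.

    The sizes and the threshold are assumptions made for this example.
    """
    return large if sequential_ratio(offsets) >= threshold else small


sequential_scan = [100, 101, 102, 103, 104]
random_lookups = [7, 912, 33, 5120, 48]
print(choose_readahead_pages(sequential_scan))  # 256
print(choose_readahead_pages(random_lookups))   # 8
```

A sequential scan benefits from a large window, while random lookups would only waste memory and I/O on pages that are never used. An ML-based approach would replace the fixed rule with a model trained on observed workloads.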

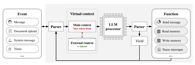

There are also investigations addressing the potential problems of an LLM-based OS. The most obvious one is the context window limitation (the constraint on the number of preceding tokens that the model considers when predicting the next token in a sequence), since the context window must play the 'memory' role in such an OS. The proposed solution, virtual context management, is inspired by the hierarchical memory structure of a traditional OS. The LLM is complemented with an 'LLM OS' that provides it with the illusion of unbounded context: by analogy with a traditional OS, there is a main context corresponding to main memory (RAM), an external context corresponding to disk storage, and function calls that move data between them. The LLM processor takes the main context as input and produces output that is parsed into either a yield or a function call.
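The two-tier idea can be sketched as a toy data structure: a bounded main context that evicts its oldest messages to an external store, plus a function that pages them back in. The class and method names, the word-based token count, and the oldest-first eviction policy are all assumptions made for illustration, not the published design.

```python
class VirtualContext:
    """Toy two-tier context: bounded main context plus an external store."""

    def __init__(self, max_tokens):
        self.max_tokens = max_tokens
        self.main = []      # messages visible to the model ("RAM")
        self.external = []  # evicted messages ("disk")

    @staticmethod
    def _tokens(message):
        # Crude assumption: one token per whitespace-separated word.
        return len(message.split())

    def _used(self):
        return sum(self._tokens(m) for m in self.main)

    def append(self, message):
        """Add a message, evicting the oldest ones when over the limit."""
        self.main.append(message)
        while self._used() > self.max_tokens and len(self.main) > 1:
            self.external.append(self.main.pop(0))

    def recall(self, keyword):
        """Function call the model could issue to page data back in."""
        found = [m for m in self.external if keyword in m]
        for m in found:
            self.external.remove(m)
            self.append(m)
        return found


ctx = VirtualContext(max_tokens=6)
ctx.append("user likes dark mode")
ctx.append("open the settings panel")   # evicts the first message
recalled = ctx.recall("dark")            # pages it back in
print(ctx.main)      # ['user likes dark mode']
print(ctx.external)  # ['open the settings panel']
```

Paging the old message back in pushes the newer one out, just as loading a page from disk may evict another from RAM. A practical system would need a smarter eviction policy and a real tokenizer.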

Conclusions

For now, the AIOS is definitely a promising direction for theoretical and scientific investigation. However, its concrete realization remains an open question that seems far from being answered: recent publications and conference talks present only visions of such systems. Most of them build on the capabilities of LLMs and assume natural language as the key communication channel in user-machine interaction.

There are clearly many potential risks in using such systems. One of the main shortcomings of LLMs is their 'black-boxiness': it is hard to predict and control their internal state and behaviour. In some sense, giving the model full control over the OS could be analogous to putting a self-driving car on the road without a driver. Thus, some additional external control may still be needed.

All the concepts suggest that an AIOS should be highly customizable for a particular user by continuously training itself, which is indeed a must-have feature. But will it be able to retrain itself quickly enough once the user's behaviour changes drastically? Additionally, training huge and powerful models usually requires a lot of specialized resources; it is not clear whether an ordinary machine could manage it.

Although most of the ideas rely on the concept of the OS as a general artificial intelligence and seem unachievable for now, integrating AI solutions for specific tasks is definitely worth considering. Agents are already successful at manipulating APIs; accessing internet resources; writing and executing code; testing and finding system issues such as race conditions; simulating behaviour; and so on. The main challenges are adaptivity to environmental changes and sufficient training data. The first can be addressed with machine learning, especially reinforcement learning methods. The need for training data can be covered by OS traces generated by running automated common-usage workflows.