How to Improve Request Execution With Redis Cache

After the major release of our ClearVPN apps, I decided to share some insights regarding request execution, one of the major problems I faced during the development process.

As expected, cache implementation can greatly improve request processing, but it was fascinating to see just how much Redis caching could improve the numbers.

Our build

We use gRPC, a fantastic framework, as the primary transport between the apps and the back end. For database management, we use PostgreSQL with PgBouncer as a connection pooler.

Initial testing

With the help of DevOps, the team conducted load testing for all gRPC endpoints prior to the release. We used a simple ghz tool with the following configuration:

{
  "call": "GetDashboardInfo",
  "total": 200,
  "concurrency": 50,
  "connections": 1,
  "timeout": 20000000000,
  "dial-timeout": 10000000000,
  "CPUs": 8
}

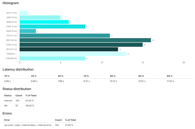

The results were disappointing, especially for the “heaviest” endpoints. Here are the key latency metrics for the endpoint that fetches all the shortcut and configuration nodes for the app.

Diagram

An average response time of 9 seconds and a 51% timeout rate for requests are far from an ideal outcome.

It sucks :(

We did make some progress by optimizing the PostgreSQL queries, which reduced the number of db connections. While far from a definitive improvement, it was still a positive development.

Here comes the solution

Without any doubt, caching was the solution. We could easily build a cache key from the country and appID fields.

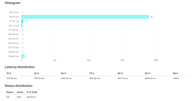
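For readers who want something concrete, here is a minimal sketch of a cache-aside read built around such a key. It is not our production code: the `shortcuts:<country>:<appID>` key layout, the JSON serialization, and the names `shortcuts_cache_key`, `get_dashboard_info`, and `InMemoryCache` are all assumptions made for illustration. The `get` and `set` calls mirror the redis-py client, and the in-memory class only exists so the example runs without a Redis server.

```python
import json
from typing import Callable, Optional, Protocol


class Cache(Protocol):
    """The two calls this sketch needs; redis-py's Redis client offers both."""

    def get(self, name: str) -> Optional[bytes]: ...
    def set(self, name: str, value: bytes) -> object: ...


class InMemoryCache:
    """Hypothetical stand-in so the example runs without a Redis server."""

    def __init__(self) -> None:
        self._data = {}

    def get(self, name: str) -> Optional[bytes]:
        return self._data.get(name)

    def set(self, name: str, value: bytes) -> bool:
        self._data[name] = value
        return True


def shortcuts_cache_key(country: str, app_id: str) -> str:
    # Assumed key layout: a shared prefix plus the two request fields.
    return f"shortcuts:{country}:{app_id}"


def get_dashboard_info(
    cache: Cache,
    country: str,
    app_id: str,
    load_from_db: Callable[[str, str], dict],
) -> dict:
    key = shortcuts_cache_key(country, app_id)
    cached = cache.get(key)
    if cached is not None:
        # Cache hit: the database is not touched at all.
        return json.loads(cached)
    # Cache miss: run the heavy query once and remember the result.
    result = load_from_db(country, app_id)
    cache.set(key, json.dumps(result).encode("utf-8"))
    return result
```

With a real server, passing a `redis.Redis()` instance instead of `InMemoryCache()` should work the same way, since both expose `get` and `set`.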

After implementing Redis cache, we measured the results using the same configuration of 50 concurrent workers and 200 total requests.

Diagram

Amazing, outstanding, magnificent!

Invalidation

It’s necessary to invalidate the entire shortcuts cache on any write operation (create, update, or delete) on the Node or Shortcut entity. This also applies to any manual change made via the admin panel.

There’s a catch, however: Redis has no single command for deleting all keys that match a pattern. From the command line, you have to run something like this:

redis-cli --scan --pattern 'xyz_category_fpc*' | xargs redis-cli del

Which brings us to these steps:

- Get the result of “KEYS shortcuts:*”

- Iterate the result and delete records one by one.

Not the most effortless experience.

Fortunately, Redis has UNLINK, a delete command that removes the keys right away and reclaims their memory asynchronously, which makes the whole process more feasible. Another performance-friendly approach is to invalidate the cache by a single key where possible (e.g., an account subscription).
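The pattern-based invalidation could be sketched roughly like this. Treat it as an illustration under stated assumptions rather than our actual implementation: `invalidate_by_pattern` and `FakeKeyStore` are hypothetical names, and the sketch iterates with SCAN instead of KEYS, because KEYS blocks the server while it walks the whole keyspace. The `scan_iter` and `unlink` method names mirror the redis-py client. The fake store only approximates Redis glob matching with `fnmatch`.

```python
from fnmatch import fnmatchcase
from itertools import islice
from typing import Iterable, Iterator, Protocol


class KeyStore(Protocol):
    """Calls used below; redis-py's Redis client exposes both names."""

    def scan_iter(self, match: str, count: int) -> Iterator[str]: ...
    def unlink(self, *names: str) -> int: ...


class FakeKeyStore:
    """Hypothetical in-memory stand-in so the sketch runs without Redis."""

    def __init__(self, keys: Iterable[str]) -> None:
        self.keys = set(keys)
        self.unlink_calls = 0

    def scan_iter(self, match: str, count: int) -> Iterator[str]:
        # Snapshot the matches so deleting while iterating is safe here.
        return iter([k for k in sorted(self.keys) if fnmatchcase(k, match)])

    def unlink(self, *names: str) -> int:
        self.unlink_calls += 1
        removed = self.keys.intersection(names)
        self.keys -= removed
        return len(removed)


def invalidate_by_pattern(client: KeyStore, pattern: str, batch_size: int = 500) -> int:
    """Remove every key matching `pattern`, one UNLINK call per batch."""
    removed = 0
    keys = client.scan_iter(match=pattern, count=batch_size)
    while True:
        batch = list(islice(keys, batch_size))
        if not batch:
            return removed
        # One round trip per batch instead of one per key.
        removed += client.unlink(*batch)
```

Batching keeps the number of round trips low, which matters once the pattern matches thousands of keys.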

If invalidating the cache is impossible, we still have time-to-live (TTL) as a fallback option. In the worst-case scenario, there would be an outdated shortcut for another 2 hours, which is not the end of the world.
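To make the TTL fallback concrete, here is a small sketch of how an expiring entry behaves. The class `ExpiringCache`, the constant name, and the injectable clock are assumptions for illustration only. In real Redis the expiry is handled by the server, for example via the `ex` argument of redis-py's `set`, which this stand-in imitates.

```python
import time
from typing import Callable, Optional

# The 2-hour worst case from the text, expressed in seconds.
SHORTCUTS_TTL_SECONDS = 2 * 60 * 60


class ExpiringCache:
    """Hypothetical in-memory stand-in that mimics SET with an EX expiry."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self._data = {}  # key -> (value, expires_at)

    def set(self, name: str, value: str, ex: int) -> None:
        self._data[name] = (value, self._clock() + ex)

    def get(self, name: str) -> Optional[str]:
        entry = self._data.get(name)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            # Expired: behave as if the key had never been written.
            del self._data[name]
            return None
        return value
```

Even if an invalidation call is missed, a stale value written this way disappears on its own once the TTL has elapsed, and the next request repopulates the cache from the database.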

Conclusions

In SaaS applications, database connections are often the bottleneck. Redis caching can be an incredible tool for improving performance when you have complex business logic and db queries with multiple joins. However, invalidation is challenging in some cases, so you should always think through the failure scenarios.